Inter-observer variation can be measured in any situation in which two or more independent observers are evaluating the same thing. Kappa is intended to… - ppt download

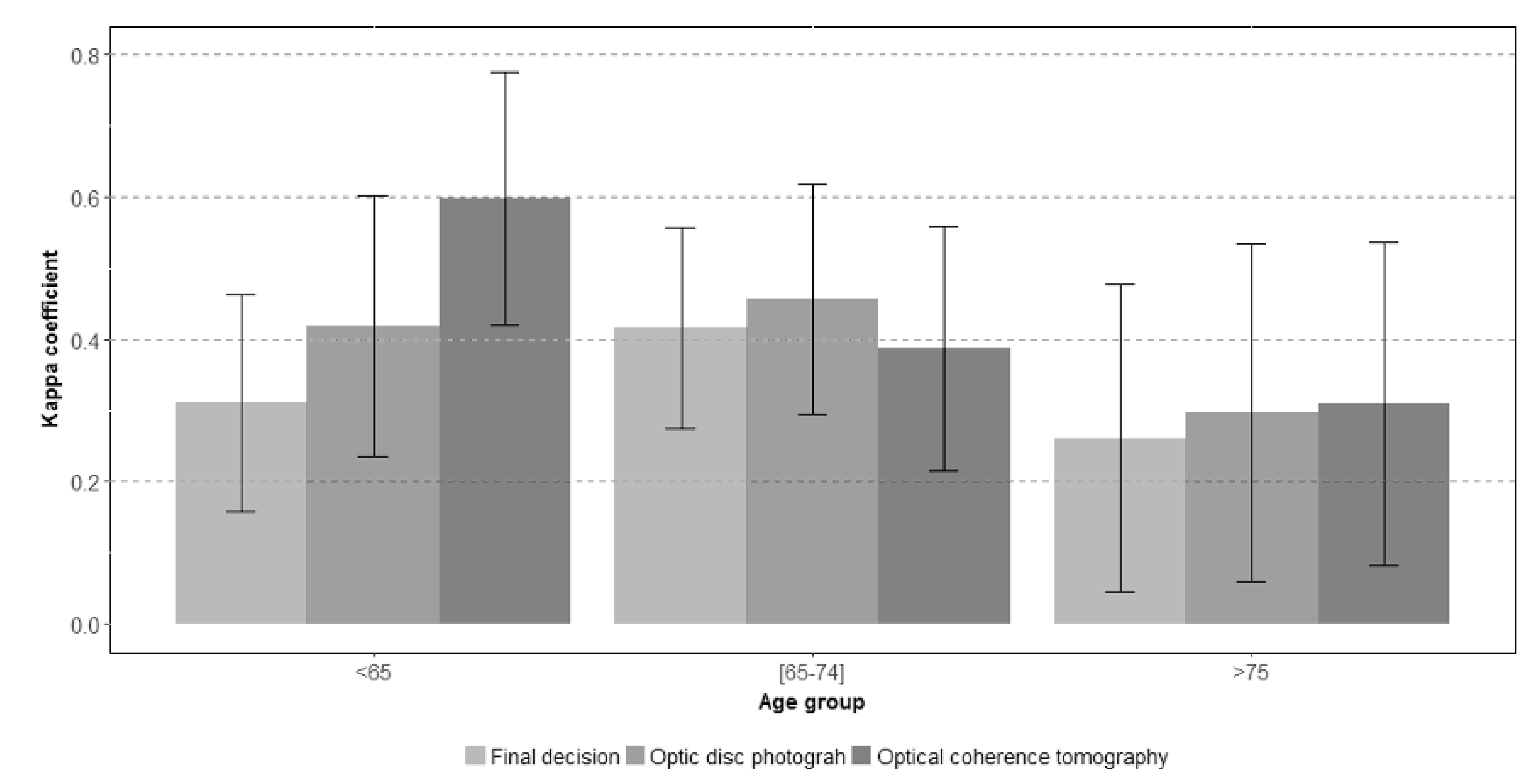

JCM | Interobserver and Intertest Agreement in Telemedicine Glaucoma Screening with Optic Disk Photos and Optical Coherence Tomography

Inter-observer agreement and reliability assessment for observational studies of clinical work - ScienceDirect

Document resume: Interobserver Agreement for the Observation Procedures for the DMP and WDRSD Observers. Wiscons…

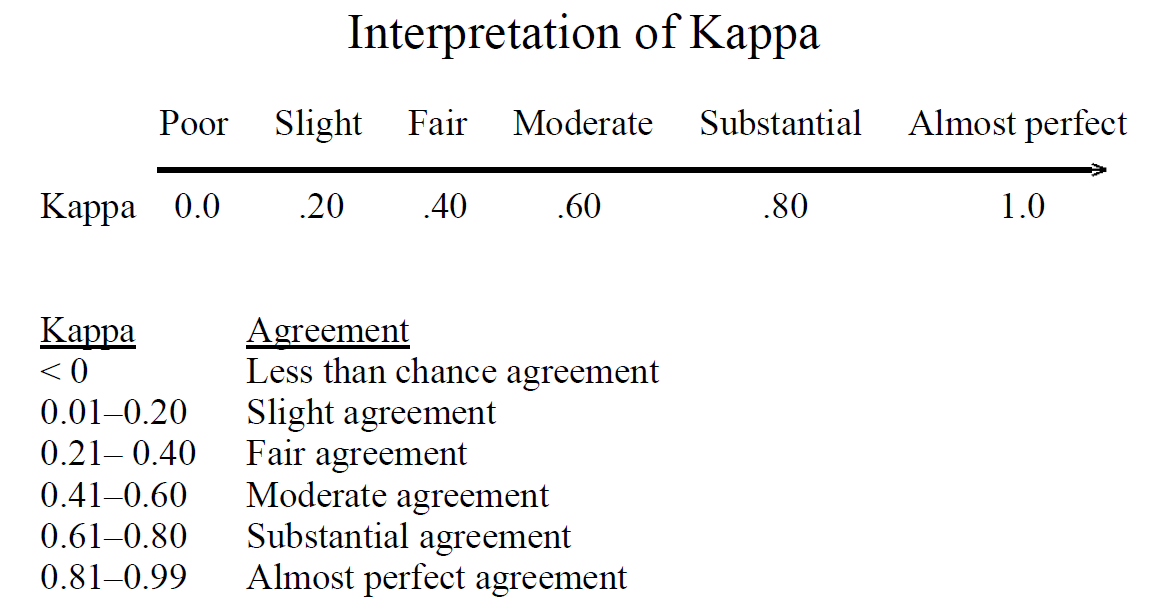

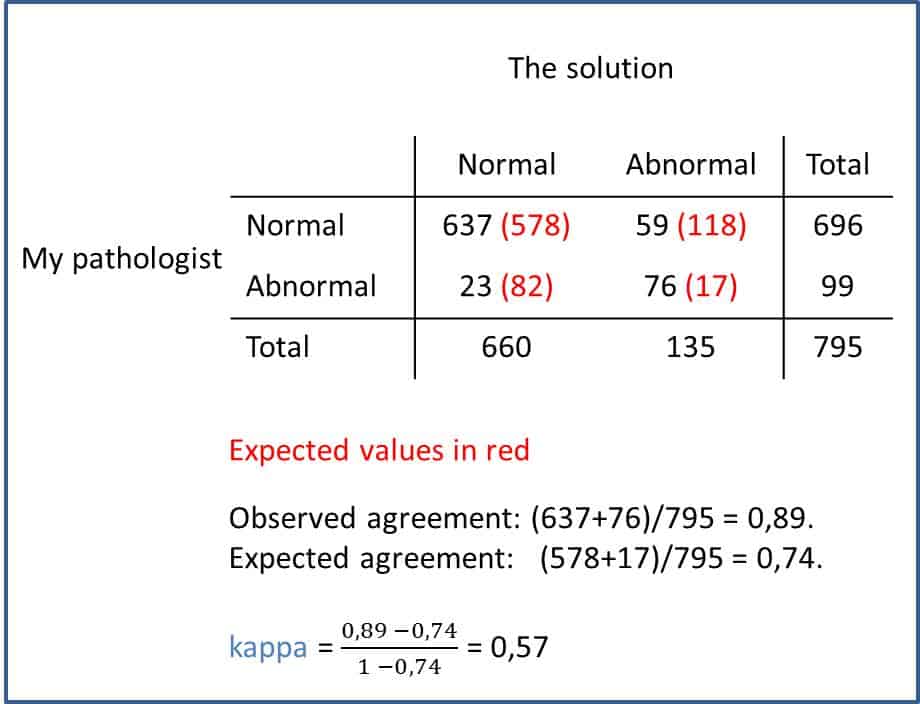

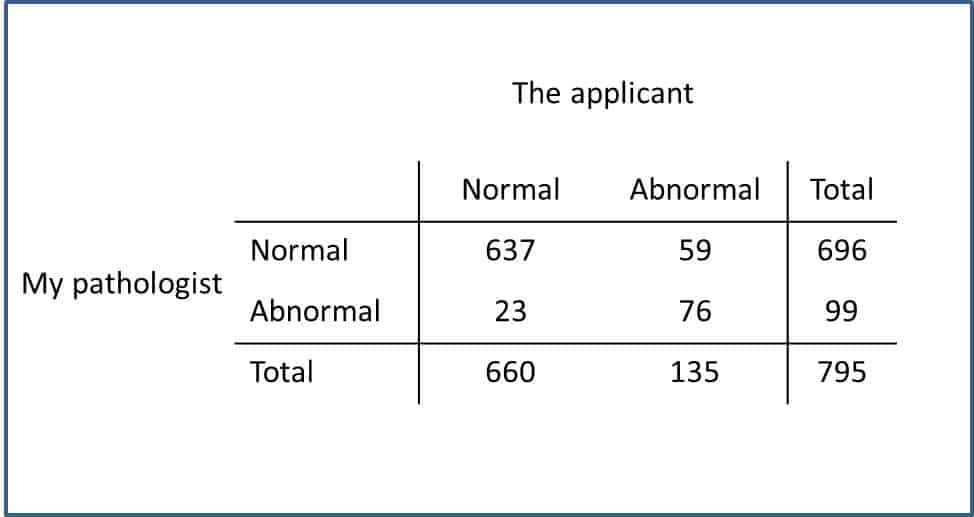

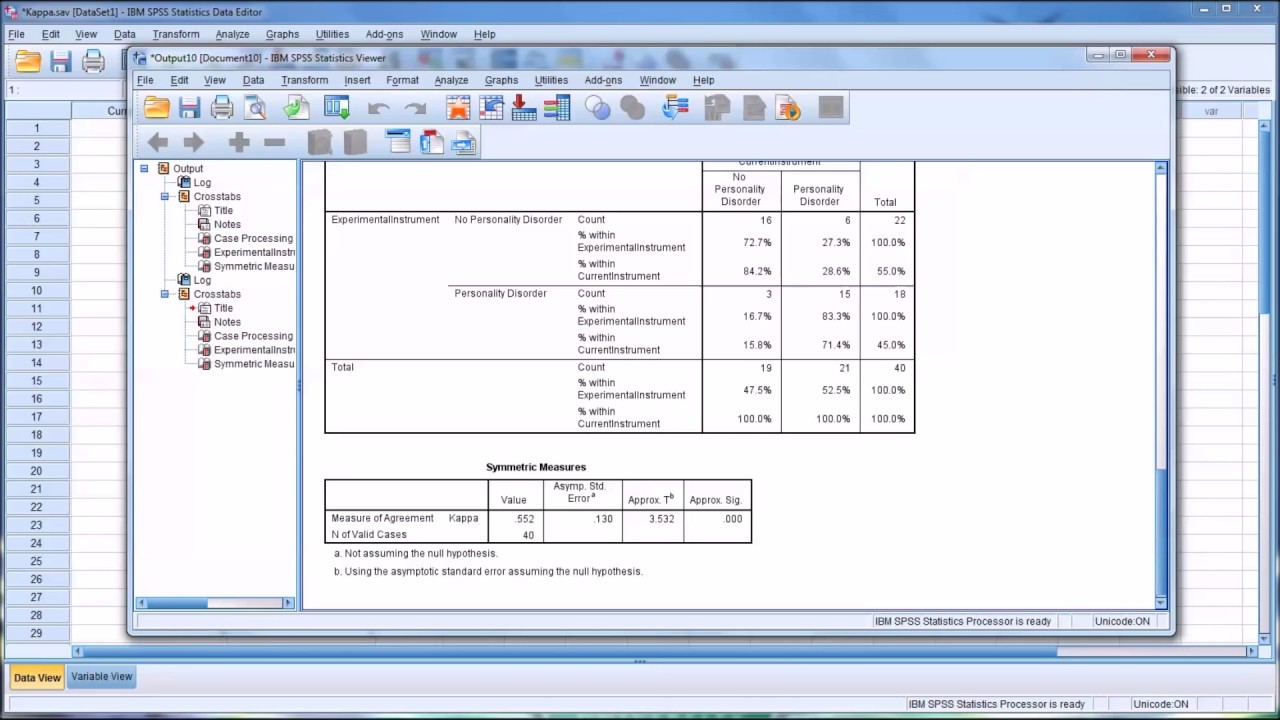

Understanding the calculation of the kappa statistic: A measure of inter-observer reliability Mishra SS, Nitika - Int J Acad Med
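
For two raters, the kappa calculation is usually written as kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each rater's marginal label frequencies. The following is a minimal sketch of that two-rater (Cohen's) form, assuming nominal labels and one label per item from each rater. The function name `cohens_kappa` and the 50-item example are illustrative and are not taken from the linked articles; scikit-learn's `cohen_kappa_score` offers a library implementation.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one nominal label per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty list of items")
    n = len(rater_a)

    # Observed agreement p_o: fraction of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement p_e: for each category, multiply the two raters'
    # marginal counts, sum over categories, and normalise by n squared.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)  # Counter returns 0 for categories rater_b never used
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Illustrative 50-item example: 20 yes/yes, 5 yes/no, 10 no/yes, 15 no/no.
rater_a = ["yes"] * 25 + ["no"] * 25
rater_b = ["yes"] * 20 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 15
print(round(cohens_kappa(rater_a, rater_b), 3))  # 0.4
```

Here p_o = 35/50 = 0.7 and p_e = (25 x 30 + 25 x 20) / 50^2 = 0.5, so kappa = (0.7 - 0.5) / (1 - 0.5) = 0.4: the raters agree on 70% of items, but only 40% of the way from chance agreement to perfect agreement.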

Kappa values for interobserver agreement for the visual grade analysis...

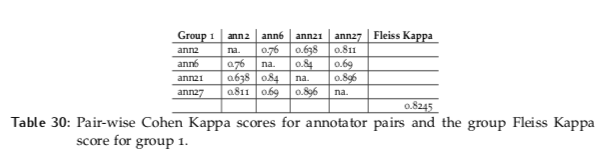

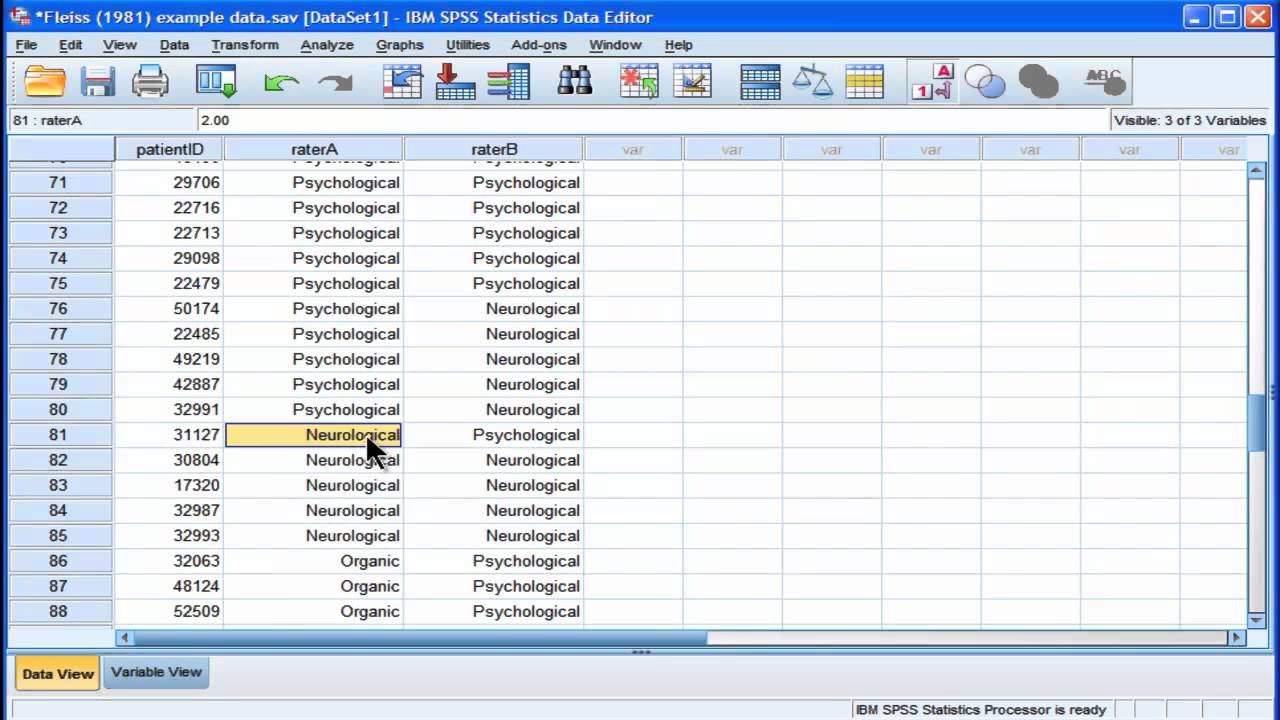

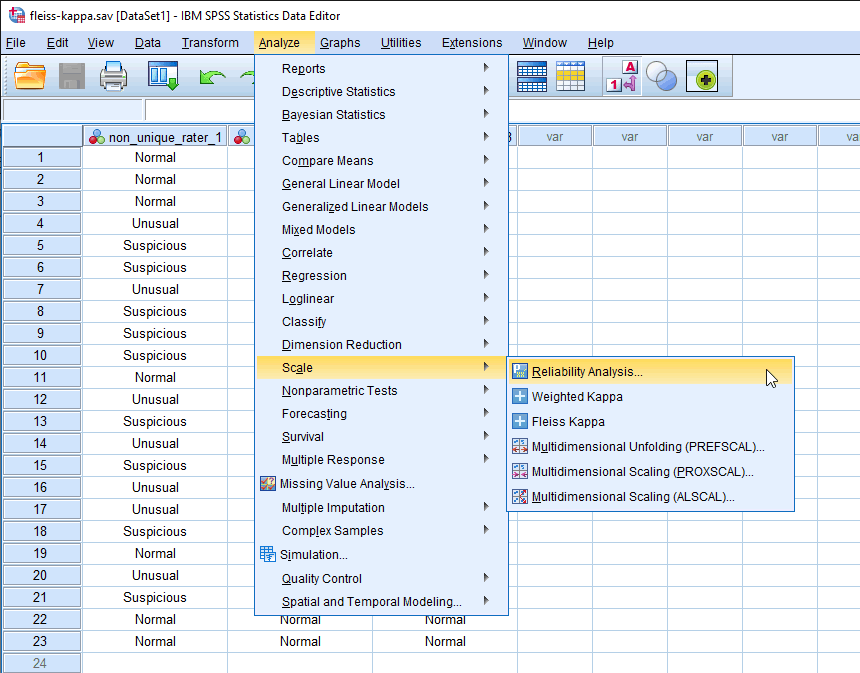

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science
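
When more than two raters label the same items, the group statistic named in the title above, Fleiss' kappa, replaces the pairwise Cohen form. The following is a minimal sketch, assuming every subject is rated by the same number of raters and that the input is a subjects x categories table of counts. The function name `fleiss_kappa` and the sample table are illustrative; statsmodels' `statsmodels.stats.inter_rater.fleiss_kappa` provides a library version.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from a subjects x categories table of rating counts.

    counts[i][j] is the number of raters who put subject i in category j.
    Assumes every subject was rated by the same number of raters.
    """
    if not counts:
        raise ValueError("need at least one subject")
    n_subjects = len(counts)
    n_categories = len(counts[0])
    m = sum(counts[0])  # raters per subject
    if m < 2 or any(len(row) != n_categories or sum(row) != m for row in counts):
        raise ValueError("each subject needs the same categories and >= 2 ratings")

    # P_i: proportion of rater pairs that agree on subject i.
    p_i = [(sum(c * c for c in row) - m) / (m * (m - 1)) for row in counts]
    p_bar = sum(p_i) / n_subjects

    # p_j: share of all ratings falling in category j; chance agreement P_e = sum of p_j^2.
    total = n_subjects * m
    p_j = [sum(row[j] for row in counts) / total for j in range(n_categories)]
    p_e = sum(p * p for p in p_j)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_bar - p_e) / (1 - p_e)


# Illustrative table: 10 subjects, 14 raters each, 5 categories.
ratings = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]
print(round(fleiss_kappa(ratings), 3))  # 0.21
```

For this table the mean pairwise agreement is about 0.378 and chance agreement about 0.213, giving a kappa of roughly 0.21.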

![Understanding interobserver agreement: the kappa statistic (PDF, Scinapse), Table 3](https://asset-pdf.scinapse.io/prod/1444168786/figures/table-3.jpg)

![Understanding interobserver agreement: the kappa statistic (PDF, Semantic Scholar), Table 3](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table3-1.png)
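
Kappa values are commonly read against the descriptive benchmarks of Landis and Koch (1977): below 0 poor, 0 to 0.20 slight, 0.21 to 0.40 fair, 0.41 to 0.60 moderate, 0.61 to 0.80 substantial, and 0.81 to 1.00 almost perfect. The following sketch encodes those cut-offs. It is an assumption that they match any table pictured here, since labels vary between sources (some call negative values "less than chance agreement" rather than "poor"). The function name `landis_koch_label` is illustrative.

```python
def landis_koch_label(kappa):
    """Return the Landis & Koch (1977) descriptive label for a kappa value."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie in [-1, 1]")
    if kappa < 0:
        return "poor"
    # Inclusive upper bound of each band from 0 upwards.
    bands = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


for k in (-0.1, 0.15, 0.4, 0.75, 0.9):
    print(k, landis_koch_label(k))
```

These bands are conventions rather than statistical tests, so a reported kappa is more informative alongside its confidence interval and the raw agreement table.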